Approximately twelve million Americans aged forty and over live with vision impairment, including one million who are legally blind, according to the Centers for Disease Control and Prevention. Although some affected individuals can be treated with surgery or medication, and recent advances in gene and stem cell therapies are showing promise, no effective treatments exist for many people who are blinded by severe degeneration of, or damage to, the retina, the optic nerve, or the cortex. In such cases, an electronic visual prosthesis, or bionic eye, may be the only option. Such devices transform light captured by a head-mounted camera into electrical pulses that are delivered through a microelectrode array implanted in the eye or the visual cortex; the brain then interprets these pulses as visual percepts, or phosphenes. Although current devices generally improve the ability to distinguish light from dark backgrounds and to see motion, the vision they provide is blurry, distorted, and often hard to interpret.

A major challenge for scientists developing visual prostheses is thus to predict what implant recipients “see” when they use their devices. Instead of seeing focal spots of light, current retinal-implant users perceive highly distorted phosphenes that often fail to assemble into more complex objects of perception. Consequently, the vision generated by current prostheses has been widely described as “fundamentally different” from natural vision and does not improve over time.

Michael Beyeler, an assistant professor of computer science and psychological and brain sciences at UC Santa Barbara, is taking a different approach. Rather than aiming to make bionic vision as natural as possible, he proposes to focus on creating practical, useful artificial vision that draws on artificial intelligence (AI)-based scene understanding and is tailored to specific real-world tasks that affect a blind person’s quality of life, such as facial recognition, outdoor navigation, and self-care.

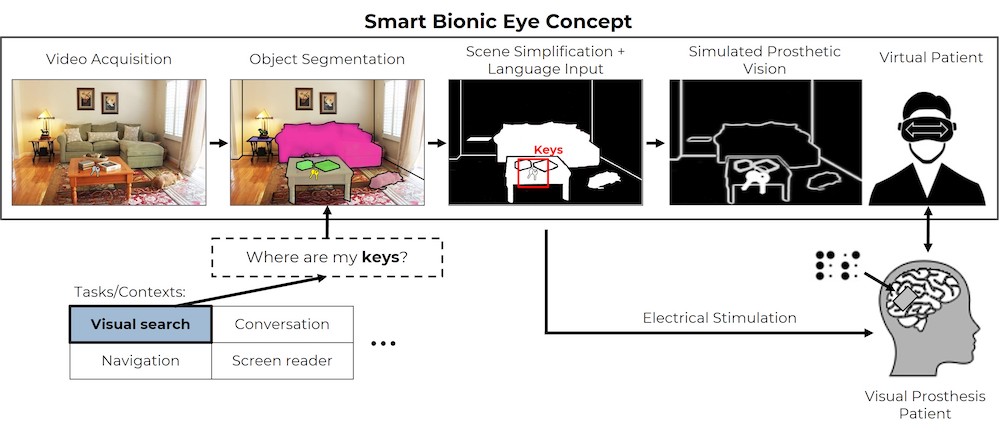

“We want to address fundamental questions at the intersection of neuroscience, computer science, and human-computer interaction to enable the development of a Smart Bionic Eye, a visual neuroprosthesis that functions as an AI-powered visual aid for the blind,” said Beyeler.

As part of his project, titled “Towards a Smart Bionic Eye: AI-Powered Artificial Vision for the Treatment of Incurable Blindness,” Beyeler will investigate the neural code of vision, studying how to translate electrode stimulation into a code that the human brain can understand. Seeing tremendous potential in Beyeler’s novel multidisciplinary approach, the National Institutes of Health (NIH) selected him to receive a prestigious NIH Director’s New Innovator Award. The five-year, $1.5 million grant was one of 103 awarded this week by the NIH, totaling more than $200 million, to enable exceptionally creative early-career scientists to push the boundaries of biomedical science and pursue high-impact projects that aim to advance knowledge and enhance health.

“I am tremendously honored and excited to be given this award,” said Beyeler, who has previously received the NIH Pathway to Independence Award. “As part of the NIH’s High-Risk, High-Reward Research program, this award will allow my group to explain the science behind bionic technologies that may one day restore useful vision to millions of people living with incurable blindness.”

Beyeler says that the grant will allow him to support a postdoctoral researcher and several PhD students in his lab, and to gain access to visual prosthesis patients at eye clinics and hospitals throughout the U.S. and Europe.

“I offer my sincerest congratulations to Professor Beyeler for having his innovative research recognized with the prestigious NIH Director's New Innovator Award,” said Tresa Pollock, the interim dean of the College of Engineering and Alcoa Distinguished Professor of Materials. “His novel approach of using recent advances in computer vision, AI, and neuroscience has tremendous potential to uncover new knowledge and provide millions of people with useful vision through a smart bionic eye.”

To enable a technology that provides cues to the visually impaired, much as a computer vision system informs a self-driving car, Beyeler must first understand how visual prostheses interact with the human visual system to shape perception. He said that a common misconception in the field is that each electrode in a device’s microelectrode array can be thought of as a pixel in an image (a minute area of illumination on a display screen) and that, to generate a complex visual experience, one simply needs to turn on the right combination of pixels. His research shows, however, that the visual experience provided by current prostheses is highly distorted and unrelated to the number of electrodes.

“Current devices do not have sufficient image resolution to convey a complex natural scene. Hence, there is a need for scene simplification,” Beyeler said.

One way to simplify the visual scene and create useful artificial vision, according to Beyeler, is through deep-learning-based computer vision, which can be used to highlight nearby obstacles or remove background clutter. Computer vision is a field of AI that enables computers and systems to derive important information from digital images, videos, and other visual inputs, and to take actions or make recommendations based on that information. Computer vision relies on cameras, data, and algorithms rather than retinas, optic nerves, and a visual cortex.

“I envision a smart bionic eye that could find misplaced keys on a counter, read out medication labels, inform a user about people’s gestures and facial expressions during social interactions, and warn a user of nearby obstacles and outline safe paths,” explained Beyeler.

He said that his project will be patient-centric, involving patients at all stages of the design process to test his group’s theoretical predictions. Patients will be recruited through his collaborators at four universities in the U.S. and Spain. Beyeler’s team will design experiments that probe an implant’s potential to support functional vision for real-world tasks involving object recognition, scene understanding, and mobility. This approach departs from the standard clinical vision tests, which measure acuity, contrast sensitivity, and orientation discrimination.

Because working with bionic-eye recipients involves unique requirements, such as constant assistance, setup time, and travel, experimentation remains time-consuming and expensive. Beyeler proposes an interim solution.

“A more cost-effective and increasingly popular alternative might be to rely on an immersive virtual reality (VR) prototype based on simulated prosthetic vision (SPV),” Beyeler explained.

The classical SPV method relies on sighted subjects wearing a VR head-mounted display (HMD). The subjects are deprived of natural vision and can perceive only the phosphenes displayed in the HMD. This approach enables sighted participants to “see” through the eyes of a bionic-eye user as they explore a virtual environment. Researchers can gain additional insight by manipulating the visual scene according to any desired image-processing or visual-enhancement strategy.

“The challenge in the field is less about dreaming up new augmentation strategies and more about finding effective visual representations to support practical, everyday tasks,” said Beyeler. “This is why, in my project, we utilize a prototyping system that allows us to explore different strategies and find out what works before implanting devices in patients.”

In the future, he said, the Smart Bionic Eye could be combined with GPS to give directions, warn users of impending dangers in their immediate surroundings, or even extend the range of visible light with the use of an infrared sensor, providing what he describes as “bionic night-time vision.” But before any of that can happen, Beyeler said, the fundamental scientific questions must be addressed.

“Success of this project would translate to a new potential treatment option for incurable blindness, which affects nearly forty million people worldwide,” said Beyeler, who plans to make all of his group’s software, tools, and deidentified data available to the scientific community. “Overall, this will be a fantastic opportunity for my lab to contribute substantially in the field of sight restoration and make a difference in the world.”

Caption: The "Smart Bionic Eye," a visual prosthesis, has the potential to provide visual augmentations by means of artificial intelligence (AI)-based scene understanding, shown here for a visual search task. For example, a user may verbally instruct the Smart Bionic Eye to locate misplaced keys, and the system would respond visually by segmenting the keys in the prosthetic image while the user looks around the room.

Michael Beyeler, assistant professor of computer science and psychological and brain sciences